How it occurs

-

User testing

One place we can anticipate confirmation bias showing up in the design process is user testing, for example when we focus only on the test findings that validate the desired outcome.

-

Design feedback

As with survivorship bias, the best design considers a diversity of perspectives, especially those of the people who will be using it. This matters for confirmation bias too: we can block out, or become more critical of, feedback that doesn’t validate or support the design direction we’d prefer.

How to avoid

-

Multi-faceted user research

You’ve probably heard the saying “pay attention to what users do, not what they say”. User actions and feedback are seldom aligned, and this can introduce inaccurate data into the design process if we rely too much on user feedback alone. User feedback is great for understanding user thinking, but not great for understanding their actions. One way to combat confirmation bias is a multi-faceted approach to user research. Combining user interviews, usability testing, and quantitative analysis to understand people’s actions helps avoid the bias that comes with relying too heavily on any single method.

-

Red team, blue team

Another effective approach to combating confirmation bias is to designate a separate team (the red team) to pick apart a design and find its flaws. In his book Design for Cognitive Bias, David Dylan Thomas points out the effectiveness of an exercise called ‘Red Team, Blue Team’, in which the red team seeks to uncover “every little unseen flaw, every overlooked potential for harm, every more elegant solution that the blue team missed because they were so in love with their initial idea”. This can be an efficient way both to discover the bias embedded in the team’s design output and to avoid the pitfalls of falling in love with the wrong idea.

-

Watch for overly optimistic interpretation of data

A critical eye is necessary during design research. We must take care not to misinterpret data, especially when the interpretation favors the desired outcome. When we watch for overly optimistic interpretations of research findings or design feedback, we can avoid confirming a preexisting bias towards a specific option or approach.

Case study

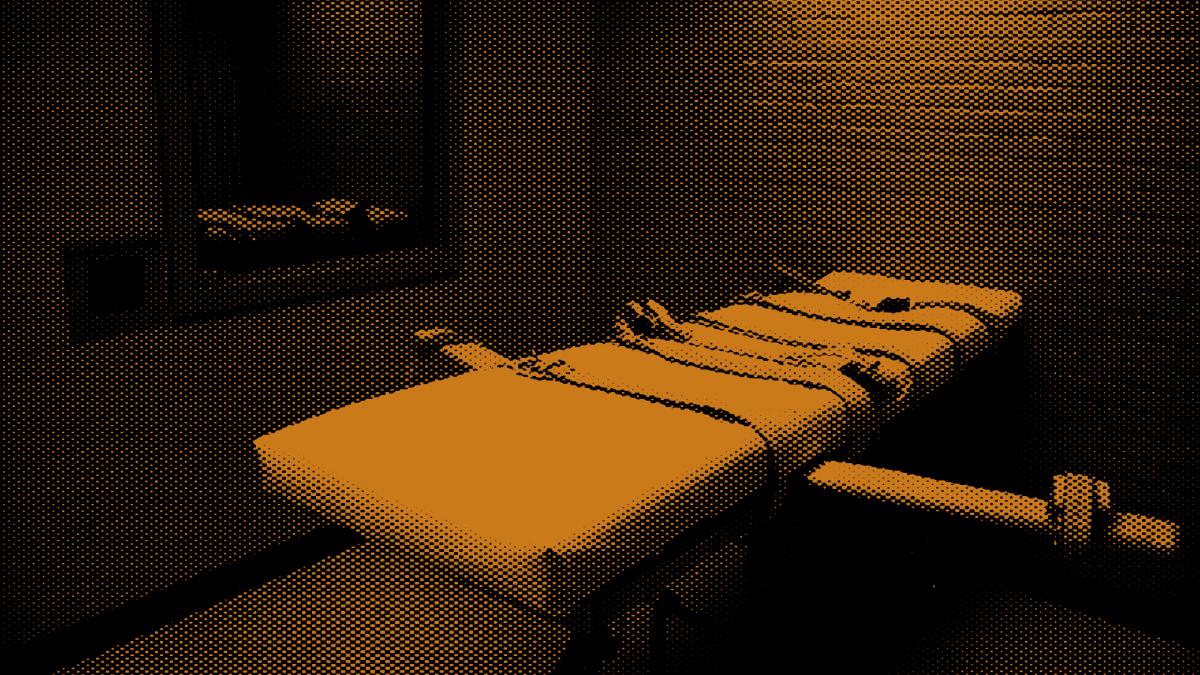

Biased Assimilation and Attitude Polarization: The Effects of Prior Theories on Subsequently Considered Evidence

People who hold strong opinions on complex social issues are likely to examine relevant empirical evidence in a biased manner. As a result, they will accept confirming evidence at face value while critically examining evidence that disconfirms their opinions. This was the conclusion of a study conducted in 1979 at Stanford University concerning capital punishment.³ In the study, each participant read a comparison of U.S. states with and without the death penalty, and then a comparison of murder rates in a state before and after the introduction of the death penalty. The participants were asked whether their opinions had changed. Next, they read a more detailed account of each comparison’s procedure and had to rate whether the research was well-conducted and convincing. Some participants were told that one comparison supported the deterrent effect and the other undermined it, while for other participants the conclusions were swapped. In fact, both comparisons were fictional.

The participants, whether supporters or opponents of capital punishment, reported shifting their attitudes slightly in the direction of the first comparison they read. After reading the more detailed descriptions of the two comparisons, almost all returned to their original belief regardless of the evidence provided, pointing to details that supported their viewpoint and disregarding anything contrary. The results illustrated that people set higher standards of evidence for hypotheses that go against their current expectations.

We tend to search for, interpret, favor, and recall information in a way that confirms or supports our prior beliefs or values. This tendency is known as confirmation bias, and when we aren’t careful it can show up during the design process as well.