How it occurs

When we make decisions based on what’s easy to recall, we fall into the trap of letting limited information guide us rather than reasoning through the situation.

How to avoid

- Invoke System 2 thinking

One effective approach to avoiding the pitfalls of this bias is to intentionally invoke System 2 thinking, which requires deliberate processing and contemplation. The enhanced monitoring of System 2 thinking works to override the impulses of System 1 and give us the room to slow down, identify our biases, and more carefully consider the impact of our decisions.

- Valuable metrics only

Relying on data that quickly comes to mind can easily distort how success is defined for a product or service. When project goals are set based on what’s easy to measure rather than what’s valuable, the measure becomes a target, and teams game the system to hit that target (e.g. clicks, daily active users, monthly active users). This ultimately leads to losing track of the needs and goals of the people who use the product or service. The alternative is to take the time to understand what people’s needs and goals actually are, and then define metrics that correspond to them.

Case study

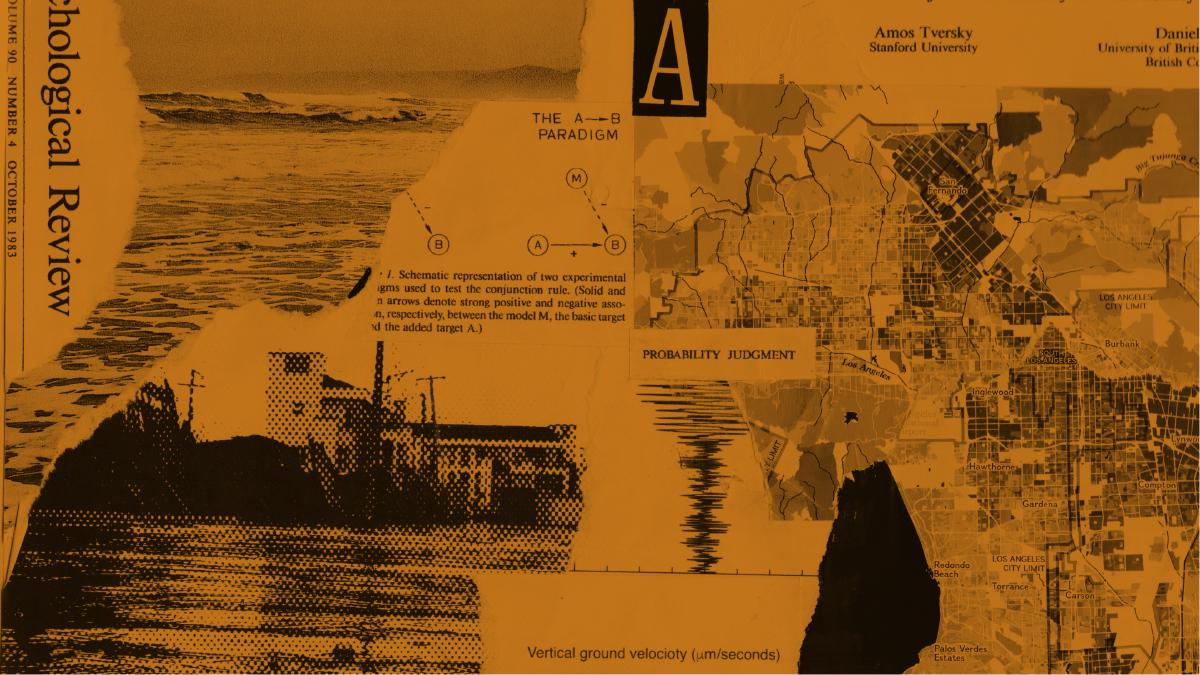

Extensional versus intuitive reasoning: The conjunction fallacy in probability judgment

A study conducted by Amos Tversky and Daniel Kahneman in 1982 asked a sample of 245 University of British Columbia undergraduates to evaluate the probability that several catastrophic events would occur in 1983. The events were presented in two versions: one that included only the basic outcome and another that included a more detailed scenario leading to the same outcome. For example, half of the participants evaluated the probability of a massive flood somewhere in North America in which more than 1000 people drown. The other half evaluated the probability of an earthquake in California causing a flood in which more than 1000 people drown. Estimates were given on a 9-point scale: less than .01%, .1%, .5%, 1%, 2%, 5%, 10%, 25%, and 50% or more.

Tversky and Kahneman found that the estimates for the conjunction (earthquake and flood) were significantly higher than the estimates for the flood alone (p < .01, by a Mann-Whitney test). A flood caused by an earthquake in California is only one of the ways a massive flood could occur somewhere in North America, so it cannot be the more probable of the two events, yet participants rated it as more likely. The researchers concluded that because an earthquake causing a flood in California is more specific and easier to imagine than a flood in an ambiguous area like all of North America, people judge it to be more probable. The same pattern was observed in other problems.
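
The rule that the participants' estimates violated can be written as a short worked inequality. This is a sketch for illustration only: the symbols F and S are introduced here and are not the paper's notation, and the conditional probability assumes P(F) > 0.

```latex
% F: a massive flood somewhere in North America in which more than 1000 people drown
% S: an earthquake in California causing a flood in which more than 1000 people drown
% California is part of North America, so S implies F, i.e. S \subseteq F.
\[
  P(S) \;=\; P(S \cap F) \;=\; P(F)\,P(S \mid F) \;\le\; P(F),
  \qquad \text{since } 0 \le P(S \mid F) \le 1 .
\]
```

Any set of estimates that places the detailed scenario above the basic outcome contradicts this inequality, no matter how plausible the scenario feels.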

The study highlights our tendency to believe that the easier something is to recall or imagine, the more frequently it must happen. This tendency, known as the availability heuristic, is a common bias: it lets our brains reach conclusions with little mental effort, based on whatever evidence comes to mind immediately. It can also heavily influence our decision-making and prevent us from seeing the bigger picture.